Recently, the ChatGPT-led generative model has become a new hot spot in AI, with Microsoft and Google in Silicon Valley investing heavily in such technologies (Microsoft has a $10 billion stake in OpenAI, the company behind ChatGPT, and Google has recently released its own BARD model), while Internet technology companies in China, represented by Baidu and others, have also indicated that they are developing such technologies and will go live in the near future. In China, Baidu and other Internet technology companies have also indicated that they are developing such technologies and will go live in the near future.

The generative models represented by ChatGPT have a common feature, that is, they use massive data for pre-training, and are often paired with a more powerful language model. The main function of the language model is to learn from the massive existing corpus, and after learning, it can understand the user's linguistic instructions, or furthermore, generate relevant text output according to the user's instructions.

Generative models can be broadly classified into two categories, one is language-based generative models and the other is image-based generative models. The language-based generative model is represented by ChatGPT, whose language model can not only learn to understand the meaning of user instructions, e.g., write a poem, Li Bai style, but also generate relevant text based on user instructions after massive data training. This means that ChatGPT needs to have a Large Language Model, LLM, large enough to understand the user's language and to be able to have high-quality language output - for example, the model must be able to understand how to generate poetry, etc. This also means that a large language model in language-based generative AI needs a very large number of parameters to be able to do this kind of complex learning and remember so much information. ChatGPT, for example, has 175 billion parameters (using standard floating point numbers would take up 700 GB of storage space), which shows how "big" its language model is.

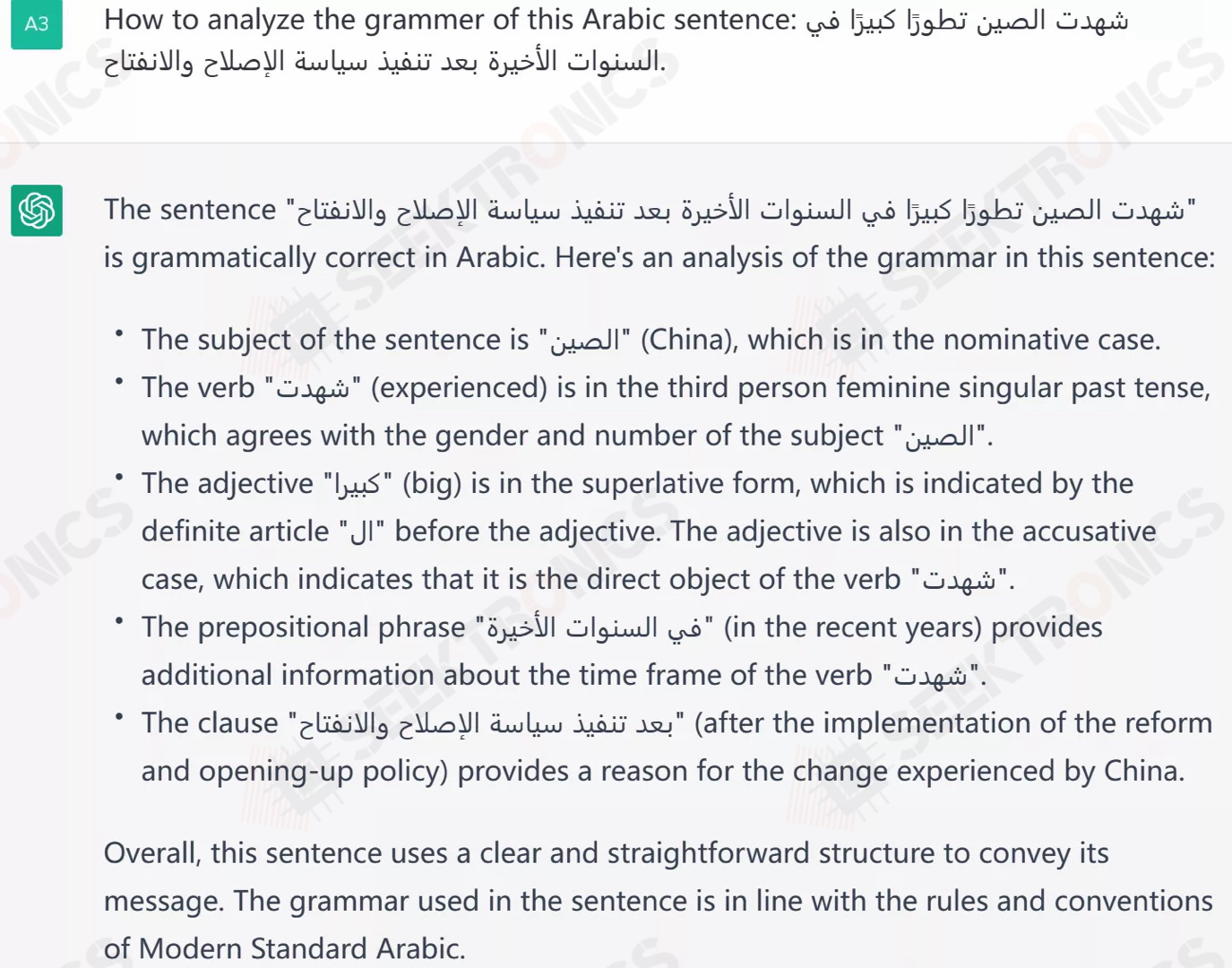

An example of ChatGPT generating responses

Another class of generative models is the image-based generative models represented by Diffusion models, typically Dalle from OpenAI, ImaGen from Google, and the most popular Stable Diffusion from Runway AI. These image-like generation models also use a language model to understand the user's linguistic commands and then generate high-quality images based on those commands. Unlike the language-based generation models, the language model used here mainly uses language to understand user input without generating language output, so the number of parameters can be quite small (in the order of a few hundred million), while the number of parameters for the image diffusion model is relatively small, in the order of a few billion overall, but the computational effort is not small, because the resolution of the generated images or videos can be very high.

An example of an image generated by an image generation model

Generative models can produce unprecedented high-quality output through massive data training, and there are already a number of clear application markets, including search, conversational bots, image generation, and editing, etc. More applications are expected in the future, which also puts demand for related chips.

The need for chips for generating class models

As mentioned earlier, ChatGPT represents a generative model that needs to learn from large amounts of training data in order to achieve high-quality generative output. In order to support efficient training and inference, generative models have their own requirements for related chips.

The first is the need for distributed computation; the number of parameters for language generative models such as ChatGPT is in the hundreds of billions, and it is almost impossible to use single-computer training and inference, but a lot of distributed computation must be used. In distributed computing, the data interconnection bandwidth between machines and the computing chip for such distributed computing (such as RDMA) has a great demand, because often the bottleneck of the task may not be in computing, but in the data interconnection above, especially in this kind of large-scale distributed computing, the chip for the efficient support of distributed computing has become more critical.

Next is the memory capacity and bandwidth. Although distributed training and inference are inevitable for language-based generative models, the local memory and bandwidth of each chip will largely determine the execution efficiency of a single chip (because each chip's memory is used to its limit). For image-based generative models, it is possible to put the models (around 20GB) all in the memory of the chip, but as image-based generative models evolve further in the future, it is likely that its memory requirements will also increase further. From this perspective, ultra-high-bandwidth memory technology represented by HBM will become the inevitable choice for related accelerator chips, while the generative class models will also accelerate HBM memory to further increase capacity and bandwidth. In addition to HBM, new storage technologies such as CXL coupled with software optimizations will also have the potential to increase the capacity and performance of local storage in such applications and are estimated to gain more industrial adoption from the rise of the generative class model.

Finally, in computation, both language-based and image-based generative class models have a large computational demand, and image-based generative models may have a much higher demand for arithmetic power as they generate higher and higher resolutions and move toward video applications - current mainstream image generative models have a computational volume of around 20 TFlops, and as towards high resolution and images, 100-1000 TFLOPS of arithmetic demand is likely to be the norm.

To sum up, we believe that the requirements of generative models for chips include distributed computing, storage, and computation, which can be said to involve all aspects of chip design, and more importantly, how to combine all these requirements together in a reasonable way to ensure that a single aspect does not become a bottleneck, which will also become a chip design system engineering problem.

GPU and the new AI chip, who has a better chance

Generative models have a new demand for chips. Who has a better chance to capture this new demand and market for GPUs (represented by Nvidia and AMD) and new AI chips (represented by Habana, and GraphCore)?

First, from the perspective of language-based generative models, GPU vendors that currently have a complete layout in this kind of ecology are more advantageous because of the huge number of participants and the need for well-distributed computing support. This is a system engineering problem that requires a complete software and hardware solution, and in this regard, Nvidia has combined its GPUs to launch the Triton solution, which supports distributed training and distributed inference, allowing a model to be divided into multiple parts and processed on different GPUs, thus solving the problem of too many parameters that cannot be accommodated by the main memory of one GPU. This solves the problem of too many parameters for one GPU's main memory. Whether you use Triton directly or do further development on the basis of Triton in the future, it is more convenient to have a complete ecological GPU. From a computational point of view, since the main computation of the language-based generation model is matrix computation, which is the strength of the GPU, the new AI chip does not have an obvious advantage over the GPU from this point of view.

From the point of view of image-based generation models, the number of parameters of such models is also large but one to two orders of magnitude smaller than the language-based generation models, in addition to its calculation will still be used in a large number of convolutional calculations, so inference applications, if you can do a very good optimization, AI chips may have some opportunities. Here the optimization includes a large amount of on-chip storage to accommodate parameters and intermediate calculation results, for convolution and efficient support of matrix operations.

In general, the current generation of AI chips is designed to target smaller models (number of parameters at the billion level, computation at the 1TOPS level), while the demand for generative models is still relatively larger than the original design target. GPUs are designed to be more flexible at the cost of efficiency, while AI chips are designed to do the opposite, pursuing the efficiency of the target application. Therefore, we believe that GPUs will still dominate such generative model acceleration in the next year or two, but as generative model designs become more stable and AI chip designs have time to catch up with generative model iterations, AI chips have the opportunity to surpass GPUs in the generative model space from an efficiency perspective.

-20x20-20x20.png)